I am a well known supporter (a.k.a. “fan”) of exact optimization. Still, I spent the whole 90 minutes master level class in operations research on heuristics this week (also last week, actually). And I think this is exactly right so. Here is why.

Last week we were discussing heuristic methods for solving specific optimization problems. Heuristics do not come with any guarantee that they find good solutions or that they are fast, there is often not even a guarantee that they find a solution at all. In practice, however, there are good reasons for using heuristics: typically, they are very intuitive, thus easy to explain, and even better: easy to understand. Deciders feel comfortable with them as they often reflect common sense. They are easy to implement, even in spreadsheets. And: often enough they work well when applied on practical data (there are many exceptions to this rule but we will speak about this in a later post).

We distinguish between construction heuristics which build solutions from scratch, and improvement heuristics which assume a solution already given and try to find better ones. The nearest neighbor heuristic for TSP (a greedy heuristic) is an example for the former, a 2-opt heuristic is an example for the latter. In 2-opt for TSP you keep on removing two edges of a tour and adding the two other edges completing the tour again, if this reduces the tour length. (Pro-tip by Torsten Koch: never ever deliver to a practitioner a solution to any optimization problem that is not 2-opt—nothing is as bad as the problem owner looking at your work and spotting an improvement immediately. Blow!)

In this week’s class we spoke about meta-heuristics. These are more general concepts that work on many kinds of optimization problems, not just tailored to one application. A popular idea is local search. Its essence: start from a solution (the incumbent), consider a neighborhood, that is, solutions that are “similar” to the incumbent, evaluate their objective function values, and chose a best one as the next incumbent—this is called a move; then iterate (I omit the many variants). The following video shows a local search in action for a practical stacking problem at a steel plant.

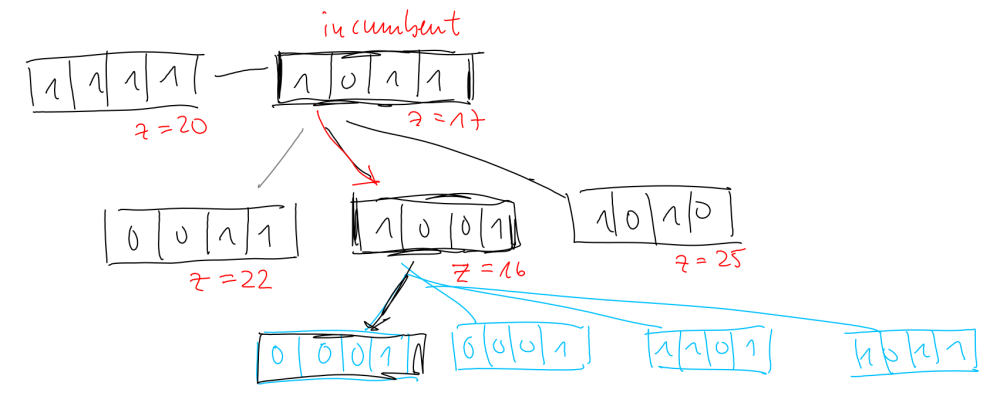

There are three core ingredients: (a) we need to define the representation of a solution, and (b) we must think about a neighborhood. Concerning the representation, ask: what defines a solution? If you know some integer programming, the question is similar to defining the meaning of variables. For the knapsack problem (and many others) a solution could be represented (or “encoded”) by a vector of bits, that is, of zeros and ones (like in the drawing at the top of this post). A zero at position i means “the i-th item is not packed” wheres a one translates to “the i-th item is packed” in this solution. Similar solutions could be those which arise when we drop exactly one of the packed items, or pack one of those so far left unpacked. That defines a neighborhood—of course only one out of many possible; we could add/drop more items, etc. Here is a sketch for flipping one bit:

The third ingredient is (c) the evaluation of the objective function of a solution (values z in the sketch). This may be very easy (e.g., when you can formulate your problem as a mathematical program, you only need to evaluate the objective function), but it may be very costly at times (e.g., when a complex simulation has to be run to assess consequences of the decisions encoded in your solution; I have seen this e.g. in public tranport planning).

The third ingredient is (c) the evaluation of the objective function of a solution (values z in the sketch). This may be very easy (e.g., when you can formulate your problem as a mathematical program, you only need to evaluate the objective function), but it may be very costly at times (e.g., when a complex simulation has to be run to assess consequences of the decisions encoded in your solution; I have seen this e.g. in public tranport planning).

I am sure that if you know a little bit of programming you can see the code already before your eyes—implementing a local search is really not difficult. Note: the larger the neighborhood, the higher the improvement potential, but the larger the computational burden to search the neighborhood; there is no free lunch.

You may also see problems that can arise. When searching the neighborhood does not yield an improving solution, you have reached a local optimum—and in general there is no guarantee that it is globally optimal. A way to escape this is to allow non-improving, even worsening moves, maybe only for a while or with a certain probability (in simulated annealing we start with a high probability for accepting a worsening move; in the course of the algorithm this probability is decreasing—the system cools down, hence the name of this variant). You may have recognized that non-improving moves entail the danger of cycling: revisits to solutions we have explored before. This can be avoided by listing such solutions (or those with other unwanted properties) in a tabu list; this is where tabu search got its name from.

There are many more meta-heuristics, often they immitate some phenomenon we observe in nature. One such phenomenon is Darwin’s survival of the fittest. In genetic or evolutionary algorithms we maintain a whole set of solutions (individuals, they form the population), from which new solutions (offsprings) are derived by e.g., recombining the encodings of two solutions (crossover) or random bit flips (mutation). We evaluate the fitness (objective function value or other desirable properties) of the grown population and (sic!) keep only the fittest (the next generation) so that population size stays constant. Crossover of two individuals could mean: keep only those properties that both individuals possess, or at least one individual possesses. Like in nature, it is advisable to start with a population that somehow represents the whole spectrum of the genetic material, e.g. with a set of solutions each of which is good at only one property (or in multi-objective optimization: which is good for only one objective function). Producing the next generation, we follow two essential ideas: diversification (solutions should not be too similar) and intensification (solution properties that appear often in fit individuals should be more likely to appear in offsprings).

All the above ideas have an impact also within exact algorithms. After all, a user of branch-and-bound in practice is interested in obtaining solutions quickly. There has been a surge of research in primal heuristics within B&B in the last 10+ years, applying ideas like local search, intensification, diversification, evolutionary algorithms, etc. to solutions of a mathematical program. Remember: B&B stays exact, but heuristics help to speed up the search and satisfy the practitioner. Of course, every exact method can be turned into a heuristic by terminating prematurely, i.e., by setting a time or node limit (or “large” optimality gap) in B&B. This is popular in practice.

On the other hand, exact algorithms can help within heuristics; such hybrids are called matheuristics. One idea is to use an integer program to search a very large scale neighborhood (VLSN). Imagine our bit representation of a solution, say, a packing of a knapsack, and the neighborhood allows to flip 50% of the bits. Even only for 100 items, each neighborhood contains up to solutions—impossible to efficiently enumerate. Still, this can be done by adding the following constraint to the knapsack integer program:

where contains the indices of variables at value 0, and

those a value 1 in the incumbent solution. The left side of the inequality “counts” the number of bit flips and this number must not exceed the given limit. Try it out yourself, this integer program solves quickly, and finds a best neighbor!

Finally, heuristic ideas play a role already when modeling your problem or processing your input data. When looking for a vehicle tour in Germany, is it really necessary to consider all the connections in your model, even those edges of 775km length from Hamburg to Munich where you can be almost sure that no vehicle will drive this distance directly? No. Are you facing a facility location problem with thousands of customers or packing problems with millions of items? Group customers or items and decide only about these clusters. Preprocess your data when it is too big, eliminate what is supportedly highly unlikely to play a role in a good solution, but don’t be too strict, for otherwise you may miss good quality solutions. As always: there is a tradeoff between computation time and solution quality and you must find a good balance yourself. But it would be a waste not to try.

Good luck!

Reblogged this on israeldaviiid.

LikeLike

Hi Marco–

A great article, thanks for sharing. I’ll mention one more advantage of heuristics, and that is that they tend to find solutions in the face of highly nonlinear objectives. This is an important feature in cases where a good solution is acceptable in the face of a rather exotic objective function.

George Danner

Business Laboratory

Houston, TX USA

LikeLiked by 1 person

Thanks George, I appreciate your comment. You are completely right, this is a very important application of heuristics. This may also apply to situations with very complicated constraints which are hard (or “impossible”) to model exactly.

LikeLike

¡A great summary! Just a remark on Multi-Objective Optimization to clarify, if it wasn’t obvious, that the objective function is multi-valued (when properly speaking). A property, is, of course, a special case of function of the simplest kind: a single-valued function.

I specially liked how you related exact methods, heuristics and matheuristics. The only element missing there is, perhaps, hyperheuristics.

LikeLike

Thank you, Alfonso, in particular for bringing up hyperheuristics. I don’t have any first hand experience on this, so it escaped me, but it appears to be a natural concept. I also like that this easily combines with ideas from machine learning, an area where I see a lot of interesting stuff to dig into in the future!

LikeLike